Closed-Ended Questions

Turn scattered user opinions into measurable insights with structured survey questions. Get a set of ready-to-use examples organized by business type.

You send out a feedback survey hoping for insights that will guide your next product decisions. Instead, you get responses like "It's fine" or "Could be better." These vague answers leave you exactly where you started, with no clear direction on what actually needs fixing. Your team ends up making product decisions based on guesswork rather than concrete user data.

Closed-ended questions transform scattered opinions into clear, actionable data. Instead of wondering what users think, businesses can pinpoint exact pain points and measure satisfaction with precision.

Closed-ended questions limit response options to specific choices. Think multiple choice, rating scales, or yes/no answers. Unlike open-ended questions that invite rambling responses, these questions guide users toward structured feedback.

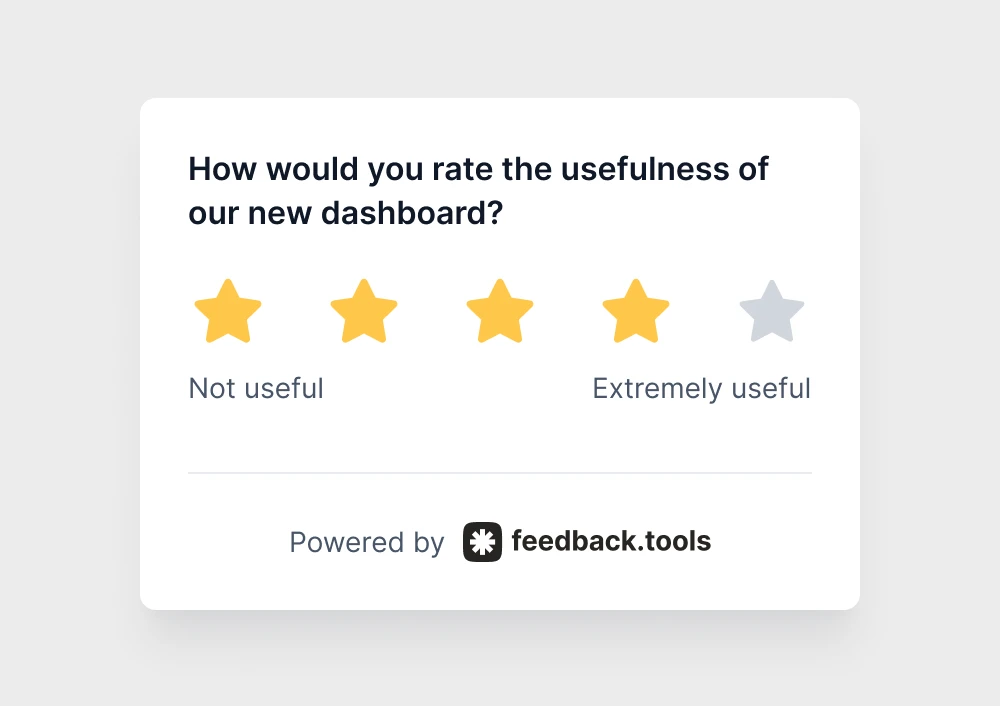

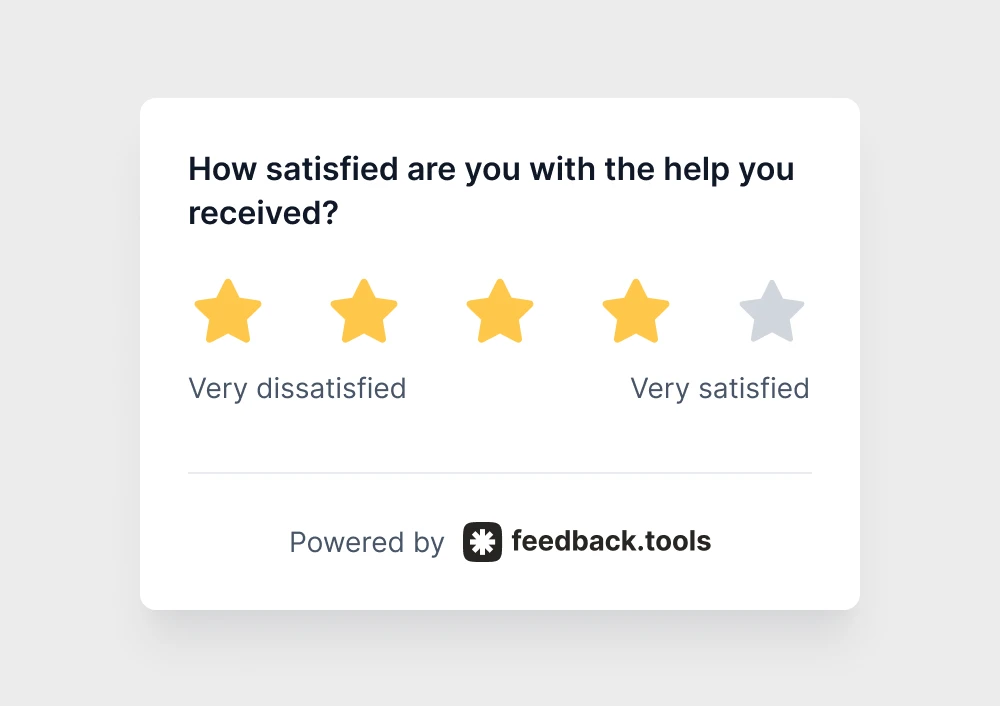

Examples of closed-ended questions:

The power lies in consistency. When 500 users answer the same structured question, patterns emerge immediately. You might see, for example, that 70% rated checkout as confusing or that 85% want a mobile app.
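To show how quickly those patterns surface, here is a minimal Python sketch that tallies answers to one closed-ended question and reports each option's share. The function name, option labels, and responses are hypothetical.

```python
from collections import Counter

def option_shares(responses: list[str]) -> dict[str, float]:
    """Return each answer option's share of all responses, as a percentage."""
    if not responses:
        return {}
    counts = Counter(responses)
    total = len(responses)
    # Most common option first, rounded to one decimal place for reporting
    return {option: round(100 * n / total, 1) for option, n in counts.most_common()}

# Hypothetical answers to "How clear was the checkout process?"
responses = ["Confusing"] * 7 + ["Clear"] * 3
print(option_shares(responses))  # {'Confusing': 70.0, 'Clear': 30.0}
```

Because every respondent picks from the same fixed list, the percentages can be compared directly across surveys and time periods.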

The closed-ended advantage

Faster responses

Users complete surveys with closed-ended questions 40% faster than open-ended alternatives. One click beats typing paragraphs every time. Higher completion rates mean more data to work with.

Progress you can measure

Tracking improvements becomes straightforward when teams ask the same structured questions monthly. Product owners can measure the impact of changes with concrete numbers rather than gut feelings.

Question types that work

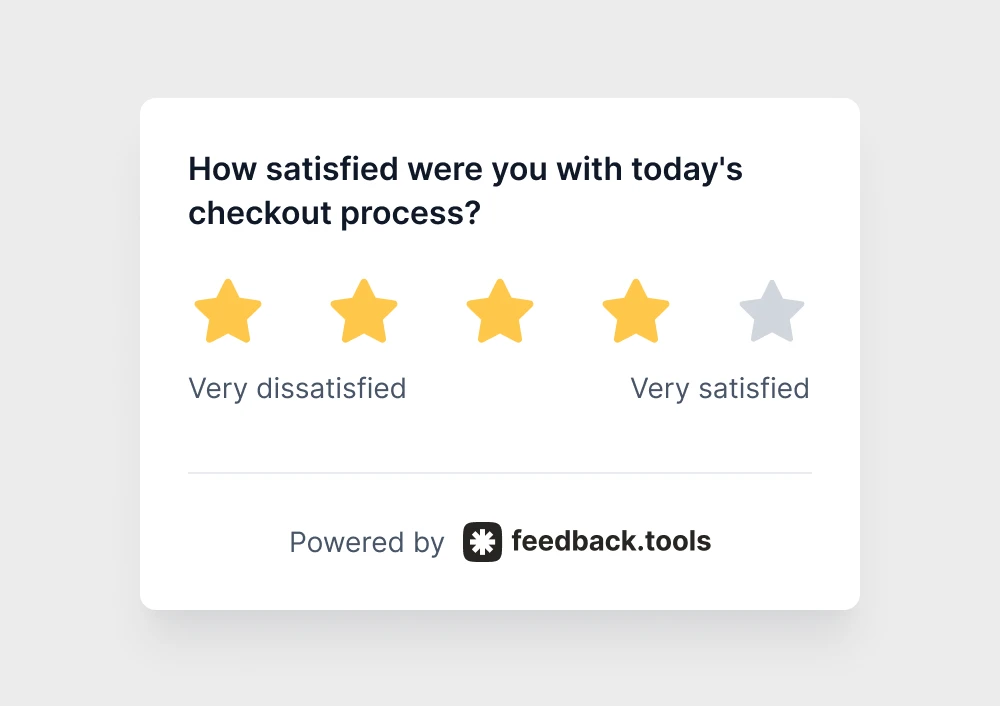

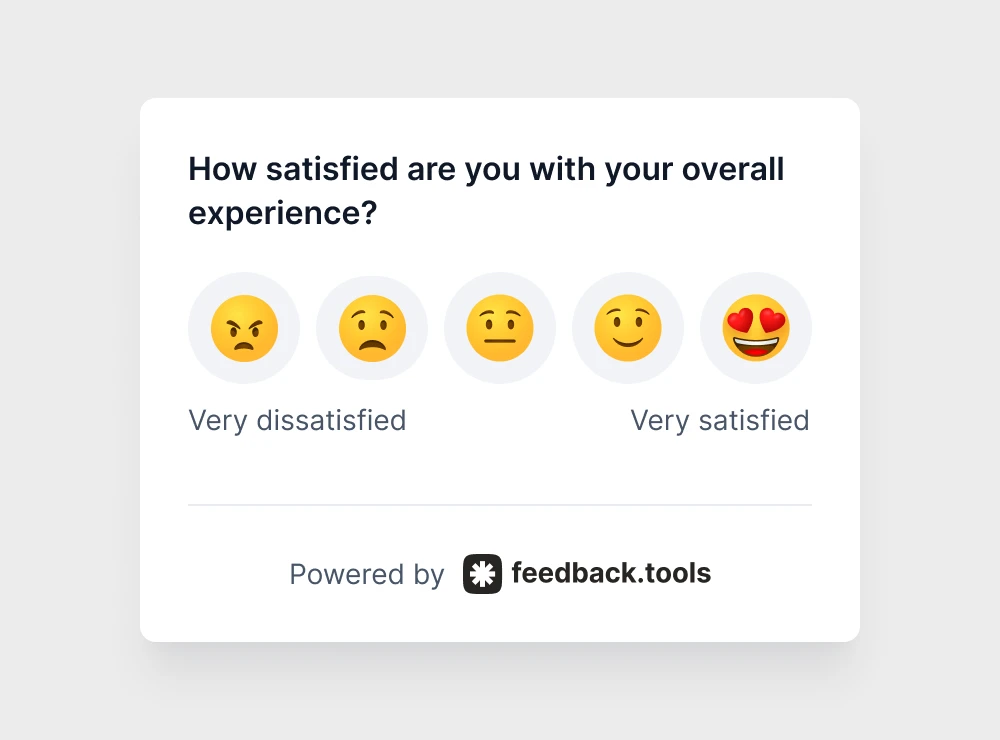

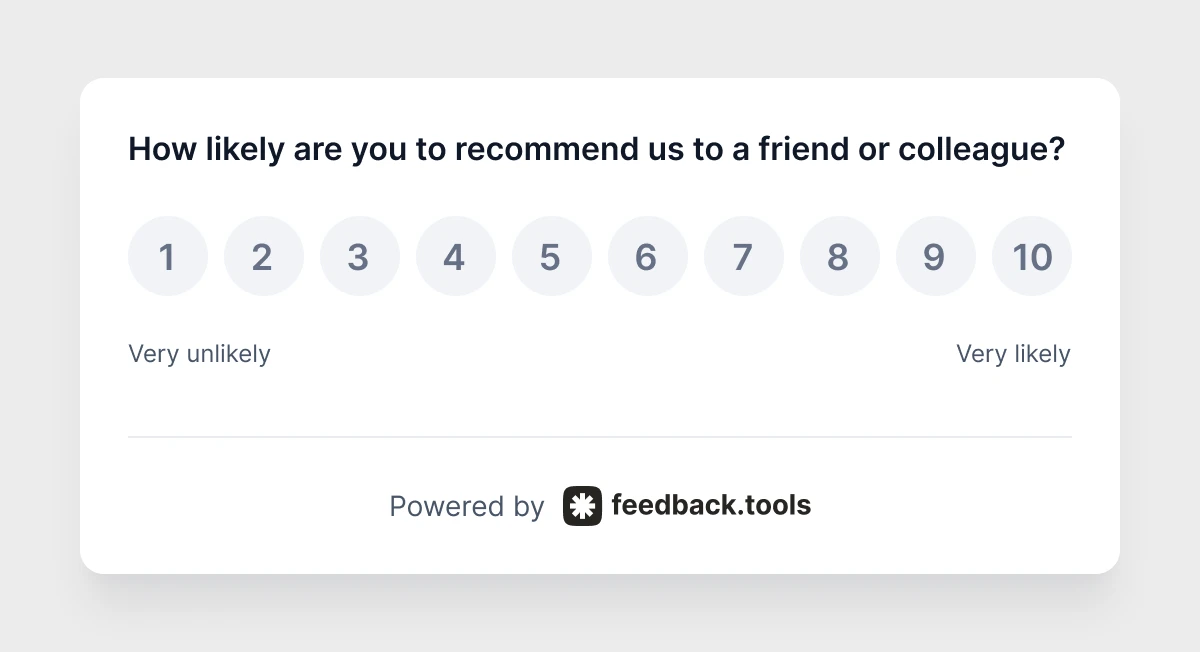

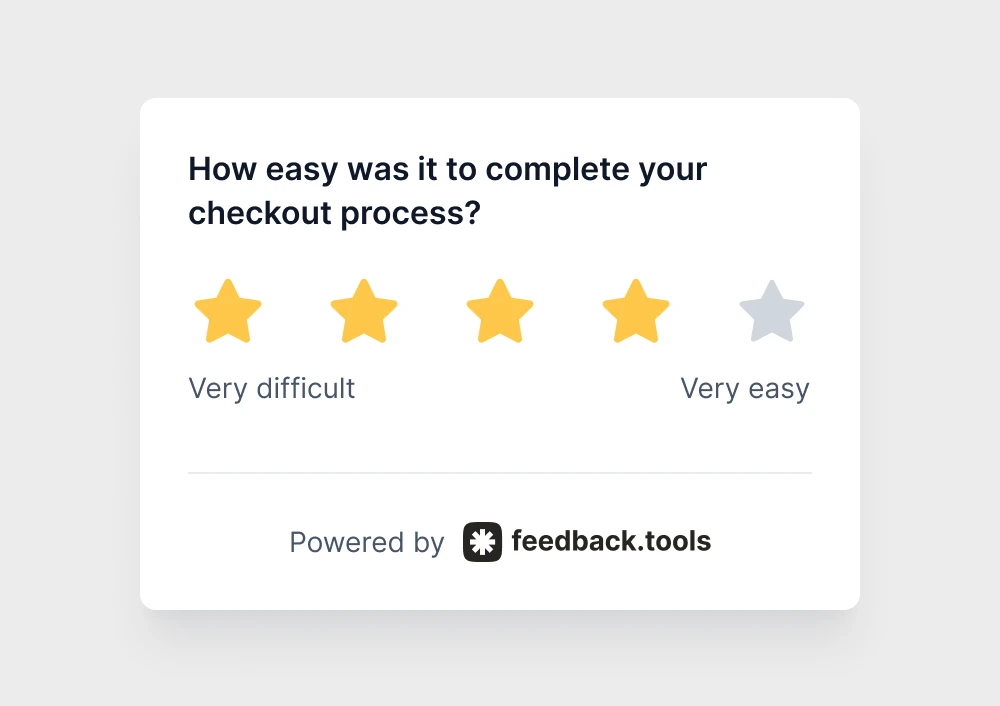

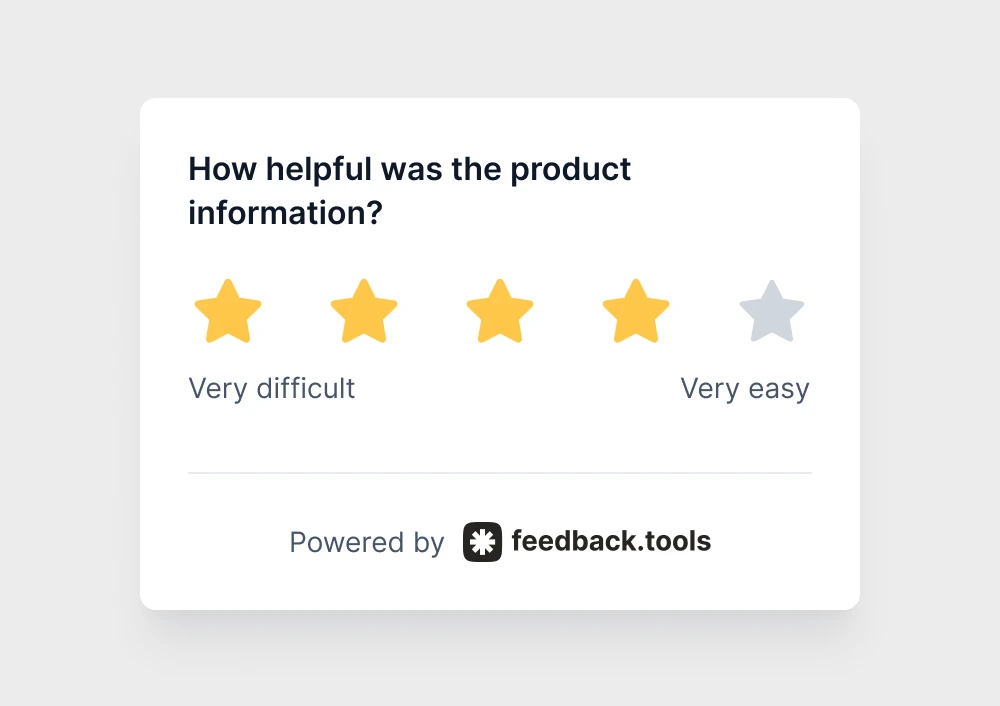

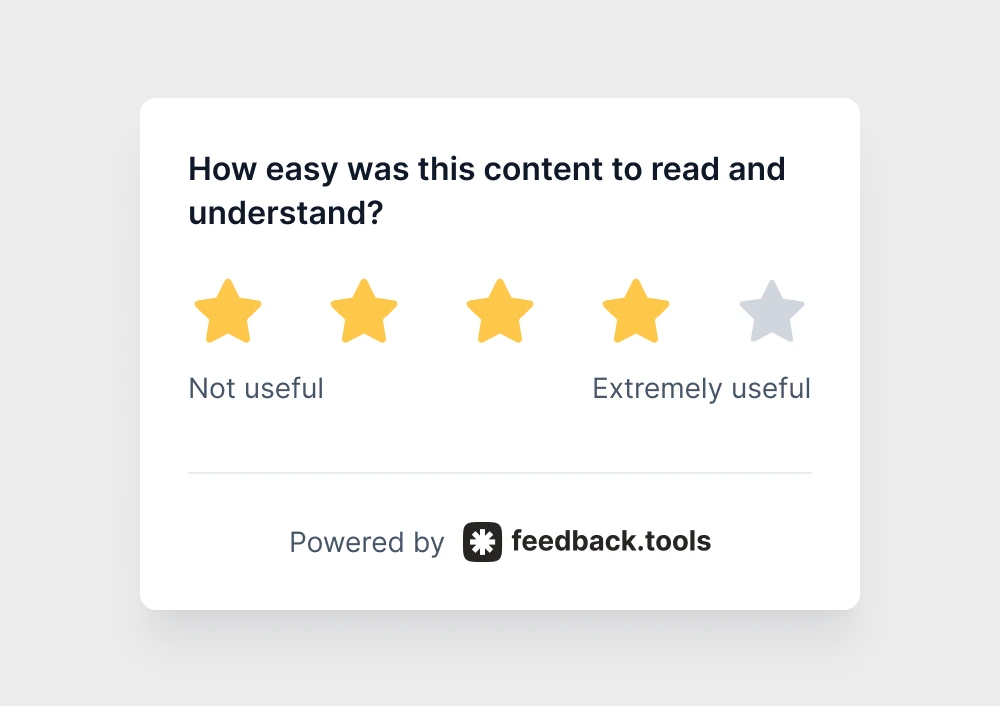

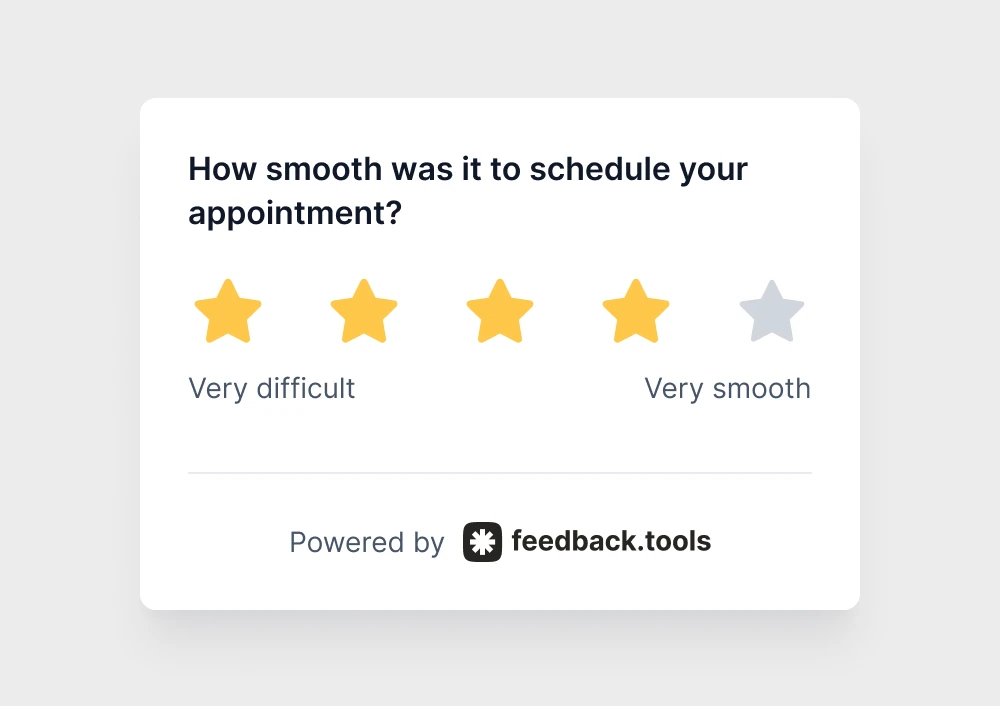

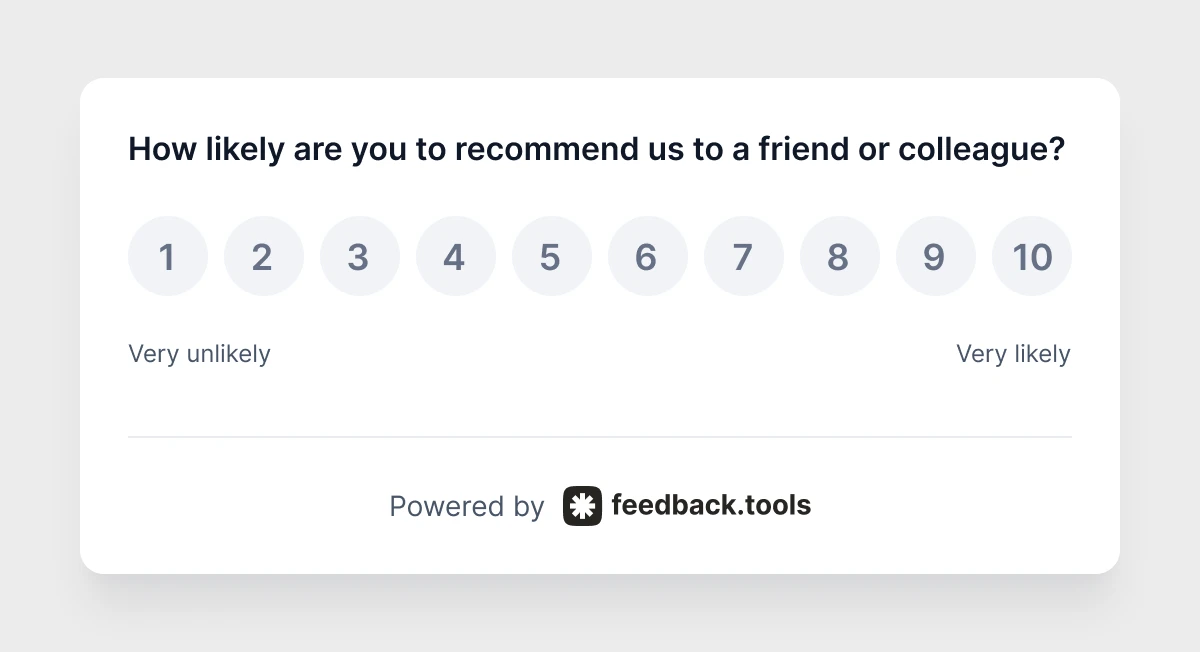

Rating scales (1-5 or 0-10)

Perfect for measuring satisfaction, difficulty, or likelihood. Users understand scales intuitively, making responses reliable and comparable. These scales power essential customer experience KPIs like CSAT, NPS, and CES.

Best for: Overall experience ratings, feature satisfaction, task difficulty, standard KPI tracking

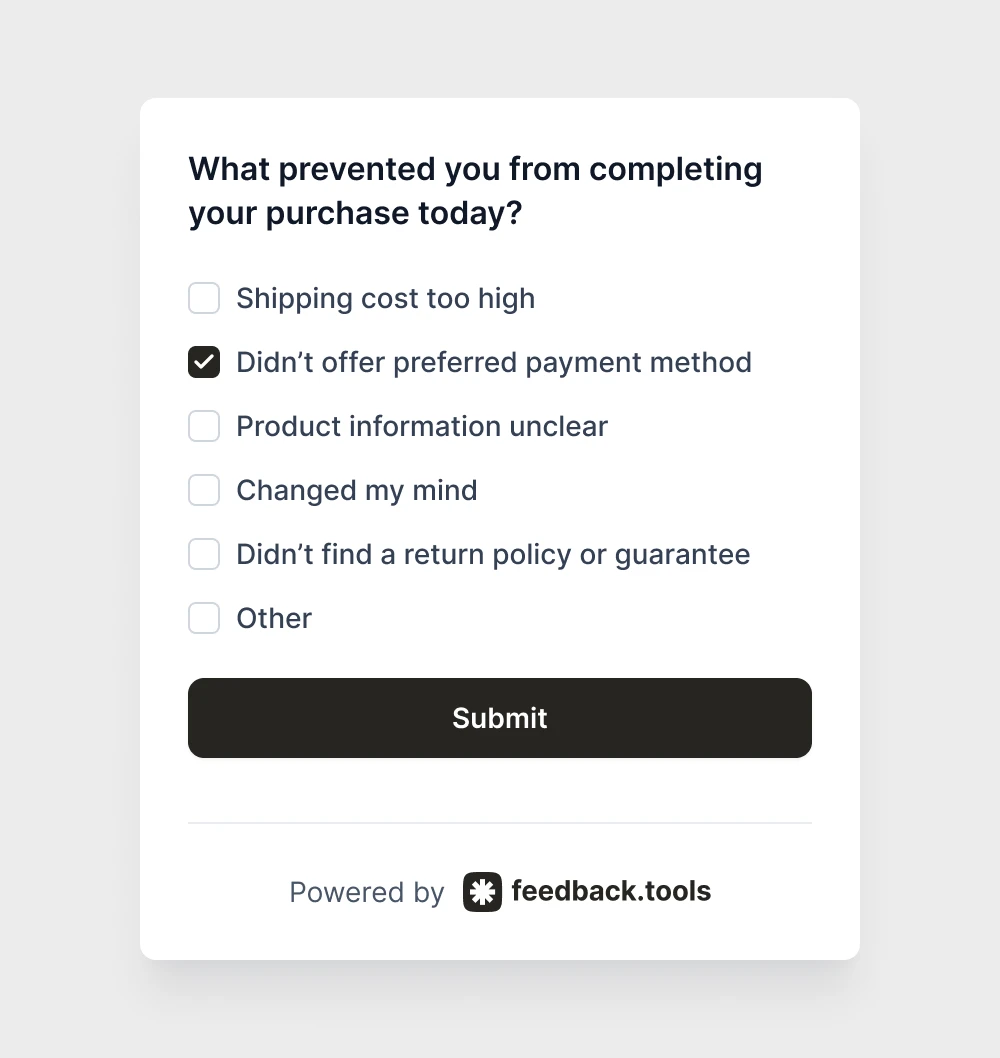

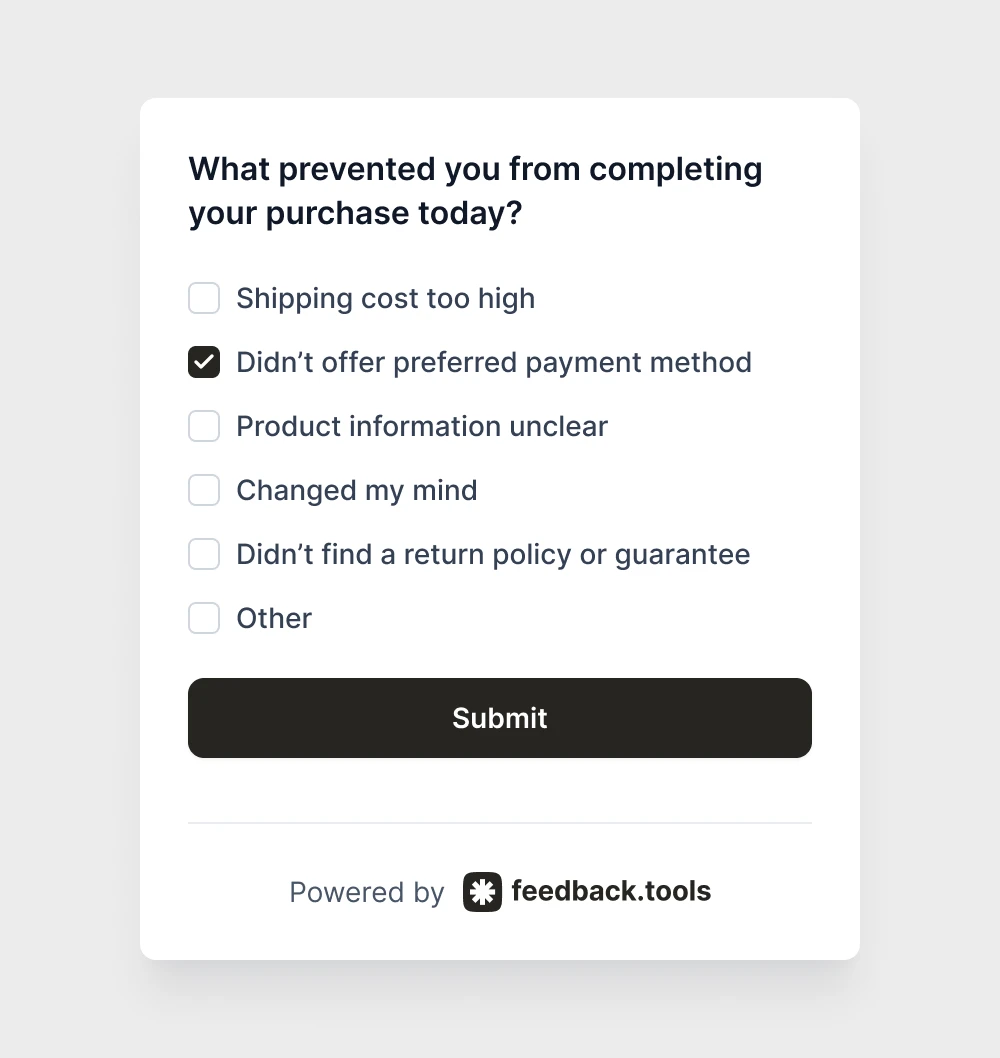

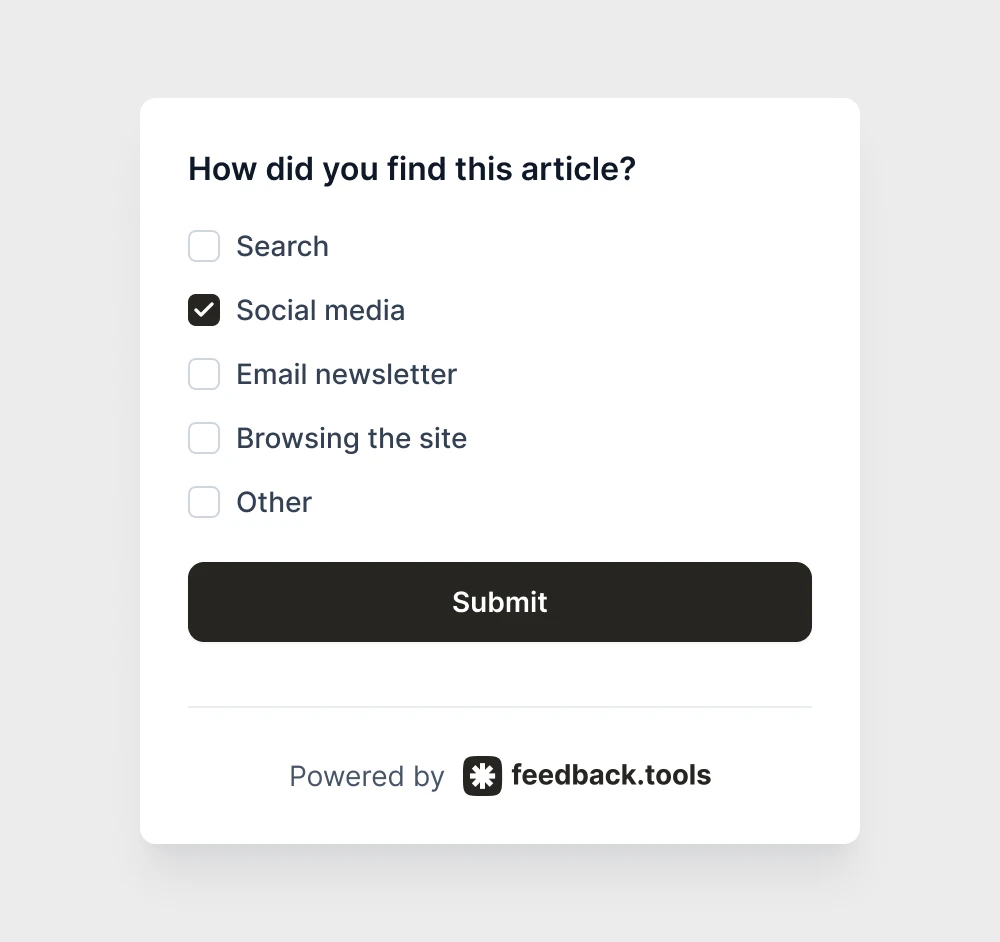

Multiple choice options

Ideal when teams want to understand preferences or identify the most common issues. Limit options to 3-7 choices for best results.

Example:

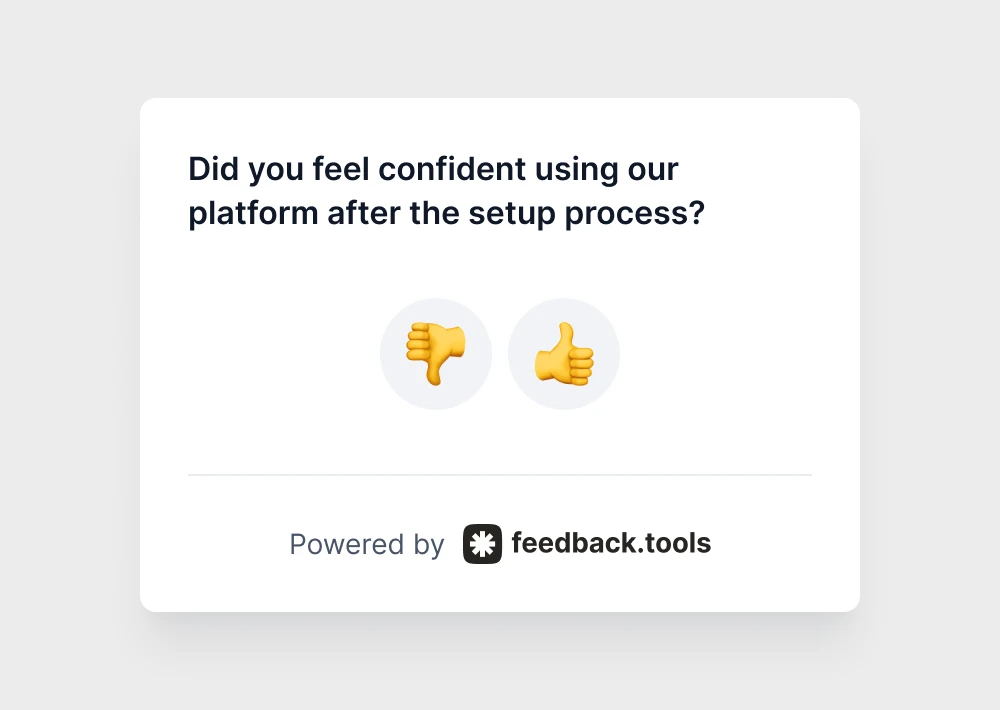

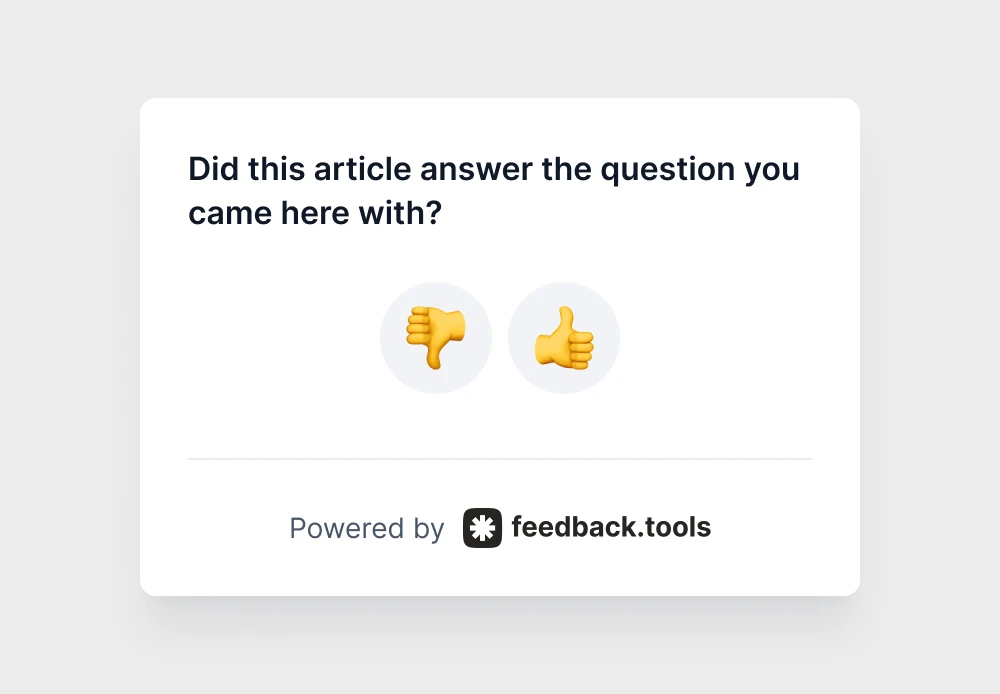

Yes/no questions

Simple but powerful for measuring completion rates, feature usage, or basic satisfaction. Great for segmenting users into different experience categories.

Best for: Task completion, feature discovery, recommendation willingness
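One way to use a yes/no answer for segmentation is sketched below in Python. It groups respondents by whether they completed their task and compares their average rating. The field names `completed_task` and `rating` are hypothetical and stand in for whatever your survey tool exports.

```python
from collections import defaultdict

def average_by_segment(responses: list[dict]) -> dict[str, float]:
    """Group responses by a yes/no answer and average a 1-5 rating within each group."""
    groups: dict[str, list[int]] = defaultdict(list)
    for r in responses:
        # The yes/no question decides which segment a respondent belongs to
        segment = "completed" if r["completed_task"] else "did not complete"
        groups[segment].append(r["rating"])
    return {seg: sum(ratings) / len(ratings) for seg, ratings in groups.items()}

# Hypothetical export: "Did you complete your task?" plus a satisfaction rating
sample = [
    {"completed_task": True, "rating": 5},
    {"completed_task": True, "rating": 4},
    {"completed_task": False, "rating": 2},
]
print(average_by_segment(sample))  # {'completed': 4.5, 'did not complete': 2.0}
```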

Mistakes that kill response quality

Too many answer choices

Offering 15 multiple-choice options overwhelms users. Stick to 3-7 meaningful alternatives. If you need more, split the topic into several questions.

Biased or leading options

Wrong: "How much do you love our new checkout process?"
Right: "How would you rate our new checkout process?"

Neutral language produces honest feedback. Leading questions generate artificially positive responses that hide real problems.

Missing the "Other" option

Always include "Other" or "None of the above" for multiple choice questions. Forced choices create inaccurate data when users can't find their actual experience listed.
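The option-count and "Other" guidelines can be enforced when a question is defined. The following Python sketch uses a hypothetical `MultipleChoiceQuestion` class, not the API of any particular survey platform. It appends "Other" when no escape option is present and rejects questions that end up outside the 3-7 range.

```python
from dataclasses import dataclass

@dataclass
class MultipleChoiceQuestion:
    """Hypothetical multiple-choice question that enforces the guidelines above."""
    text: str
    options: list[str]
    min_options: int = 3
    max_options: int = 7
    fallback: str = "Other"

    def __post_init__(self) -> None:
        # Add an escape option so nobody is forced into an inaccurate answer
        if self.fallback not in self.options and "None of the above" not in self.options:
            self.options = [*self.options, self.fallback]
        # The count includes the fallback option
        if not self.min_options <= len(self.options) <= self.max_options:
            raise ValueError(
                f"{len(self.options)} options; keep between "
                f"{self.min_options} and {self.max_options}"
            )

q = MultipleChoiceQuestion("What stopped you from buying?", ["Price", "Shipping cost", "Payment options"])
print(q.options)  # ['Price', 'Shipping cost', 'Payment options', 'Other']
```

Validating at definition time catches an overloaded question before it reaches users, not after the data comes back skewed.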

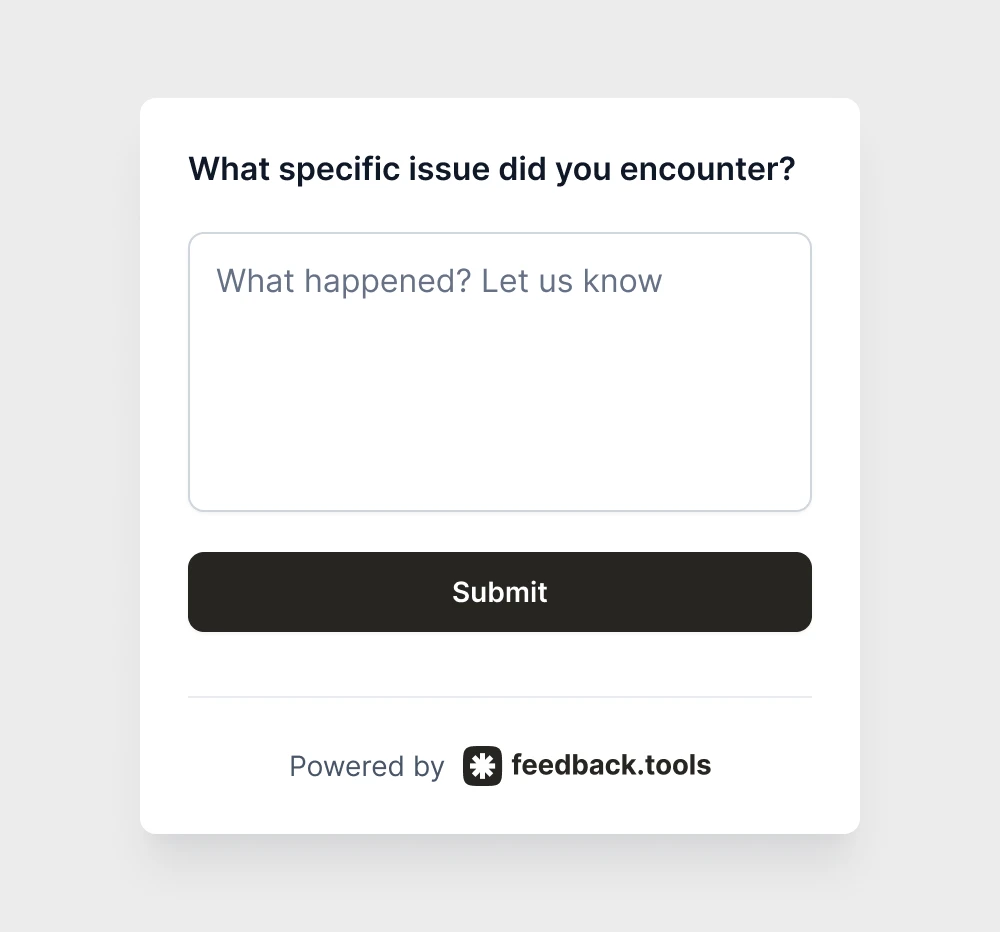

The smart hybrid approach

The most effective feedback strategies combine both question types. Use closed-ended questions to identify patterns, then follow up with targeted open-ended questions for context.

Winning combination example:

This approach gives teams measurable data plus specific improvement suggestions. Users who had positive experiences skip the follow-up, while frustrated users can explain exactly what went wrong.
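Here is a minimal Python sketch of the skip logic described above. It assumes a 1-5 satisfaction rating where 4 or higher counts as positive. Both the threshold and the follow-up wording are hypothetical.

```python
from typing import Optional

# Hypothetical open-ended follow-up shown only to dissatisfied users
FOLLOW_UP = "What was the most frustrating part of checkout?"

def next_question(rating: int, positive_threshold: int = 4) -> Optional[str]:
    """Return an open-ended follow-up for low ratings, or None to skip it."""
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    # Satisfied users skip the follow-up; frustrated users get room to explain
    return None if rating >= positive_threshold else FOLLOW_UP

print(next_question(5))  # None
print(next_question(2))  # What was the most frustrating part of checkout?
```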

Smart implementation tips

Start with your biggest questions

Ask the most important questions first. Survey fatigue sets in quickly, so prioritize the feedback that impacts business decisions most.

Test questions before launch

Run new questions past 5-10 team members or beta users first. What seems clear to product owners might confuse actual users.

Ask at the right moment

Ask about checkout immediately after someone attempts to buy. Ask about search functionality right after someone uses it. Context improves response quality significantly.

Track essential KPIs

Closed-ended questions power three widely used customer experience metrics that product owners rely on for strategic decisions:

CSAT (Customer satisfaction score)

CSAT measures satisfaction with specific interactions like checkout, onboarding, or support. Track CSAT monthly to see if product improvements actually make users happier. Scores above 80% indicate strong satisfaction, while anything below 70% signals that urgent attention is needed.
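The Python sketch below computes CSAT for each month. It assumes the common convention that CSAT is the percentage of respondents who answer 4 or 5 on a 1-5 scale. The 80% and 70% thresholds come from the text above. The "monitor" label for the band between them and the monthly ratings are hypothetical.

```python
def csat(ratings: list[int]) -> float:
    """CSAT as the percentage of 4 and 5 ratings on a 1-5 scale (a common convention)."""
    if not ratings:
        raise ValueError("no ratings")
    satisfied = sum(1 for r in ratings if r >= 4)
    return 100 * satisfied / len(ratings)

def csat_status(score: float) -> str:
    # Thresholds from the text; "monitor" is a hypothetical label for 70-80%
    if score > 80:
        return "strong"
    if score < 70:
        return "needs urgent attention"
    return "monitor"

# Hypothetical monthly checkout ratings
monthly = {
    "2024-01": [5, 4, 3, 2, 4, 5, 3, 4, 2, 5],
    "2024-02": [5, 4, 4, 5, 3, 4, 5, 4, 5, 4],
}
for month, ratings in monthly.items():
    score = csat(ratings)
    print(month, f"{score:.0f}%", csat_status(score))
# 2024-01 60% needs urgent attention
# 2024-02 90% strong
```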

NPS (Net promoter score)

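NPS measures customer loyalty and is widely used as a signal of growth potential. It comes from a single question asking how likely users are to recommend you, answered on a 0-10 scale. Users who score 9-10 are promoters, 7-8 are passives, and 0-6 are detractors. Calculate NPS by subtracting the percentage of detractors from the percentage of promoters, which gives a score between -100 and +100. Scores above 50 are excellent, while negative scores indicate serious problems.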
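The NPS calculation fits in a few lines. This Python sketch follows the promoter, passive, and detractor bands described above. The sample scores are hypothetical.

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score from 0-10 answers: % promoters minus % detractors."""
    if not scores:
        raise ValueError("no scores")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)   # 9-10
    detractors = sum(1 for s in scores if s <= 6)  # 0-6; 7-8 are passives
    return 100 * (promoters - detractors) / len(scores)

# Hypothetical sample: 5 promoters, 3 passives, 2 detractors
print(nps([10, 9, 9, 10, 9, 8, 7, 7, 3, 6]))  # 30.0
```

Passives count toward the total but not toward either group. Adding more passives therefore pulls the score toward zero without making it negative.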

CES (Customer effort score)

CES measures friction in user experiences. High-effort experiences drive customers away, even when they succeed at their task. On a 7-point scale where higher scores mean less effort, teams should prioritize reducing effort for processes with CES scores below 5. Small improvements in ease of use often deliver bigger returns than adding new features.
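The Python sketch below flags high-effort processes. It assumes CES is the average answer on a 1-7 scale where 7 means "very easy" (CES scales vary between tools). It uses the threshold of 5 from the text. The process names and responses are hypothetical.

```python
def ces(responses: list[int]) -> float:
    """Average effort score, assuming a 1-7 scale where 7 means 'very easy'."""
    if not responses:
        raise ValueError("no responses")
    if any(not 1 <= r <= 7 for r in responses):
        raise ValueError("responses must be between 1 and 7")
    return sum(responses) / len(responses)

def high_effort_processes(by_process: dict[str, list[int]], threshold: float = 5) -> list[str]:
    """Return processes whose average CES falls below the threshold, lowest first."""
    scores = {name: ces(r) for name, r in by_process.items()}
    flagged = [name for name, s in scores.items() if s < threshold]
    return sorted(flagged, key=scores.__getitem__)

# Hypothetical responses per process
by_process = {
    "checkout": [3, 4, 5, 4],    # mean 4.0
    "onboarding": [6, 7, 5, 6],  # mean 6.0
    "returns": [2, 3, 3, 4],     # mean 3.0
}
print(high_effort_processes(by_process))  # ['returns', 'checkout']
```

Sorting the flagged processes by score puts the highest-friction process first, which makes the prioritization step explicit.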

Survey questions by business type

Different types of businesses need different feedback approaches. Here are proven closed-ended questions organized by industry to help collect the most valuable insights.

E-commerce & online store surveys

Product Page Feedback:

Purchase Intent:

SaaS & software product surveys

Feature Satisfaction:

Onboarding Experience:

Support Satisfaction:

Content & blog surveys

Content Usefulness:

Reading Experience:

Content Discovery:

Service business surveys

Booking Process:

Service Quality:

Turn data into decisions

Closed-ended questions excel at creating clear next steps. When teams know that 68% of users rate the mobile experience as poor, the priority becomes obvious. When 80% want a specific integration, roadmap decisions get easier.

AI analysis makes this even more powerful. Advanced feedback platforms automatically highlight trends, compare responses across time periods, and suggest which improvements could have the biggest impact.

Companies using structured feedback questions report 25% faster decision-making on product improvements. Instead of debating what users might want, teams can act on concrete evidence.

No more vague responses like "It's fine" or "Could be better."